Is AI making the same mistakes as us?

I watched the Netflix documentary CodedBias last weekend, and it raised some very good points about AI development. For those of you who haven’t seen it yet, the film tells the story of a group of inspiring women fighting against the injustices of AI. It feels like a natural follow-up to The Social Dilemma, so if you were horrified by the practices uncovered there, I strongly recommend you also watch CodedBias!

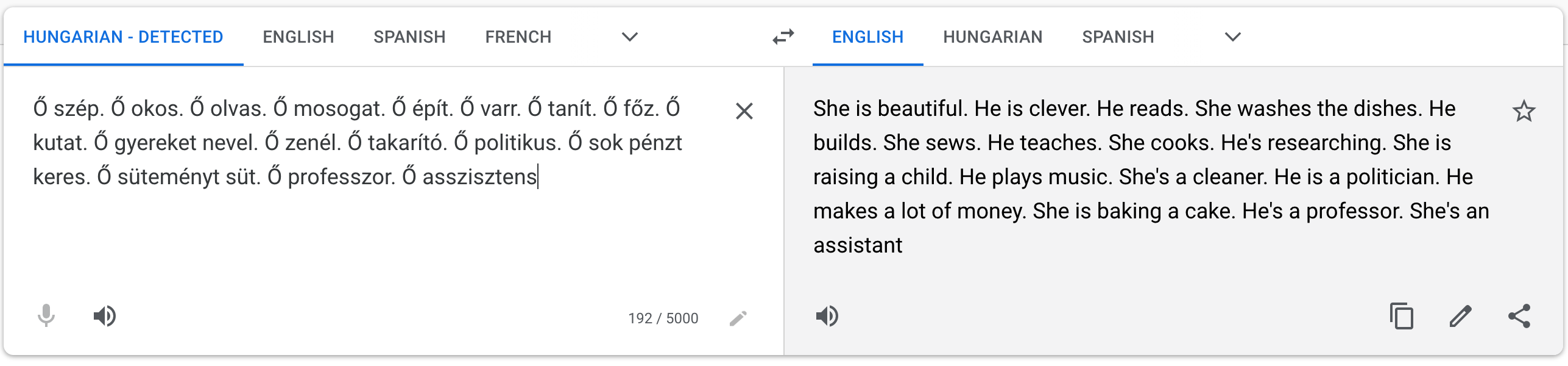

Rather than exploring Terminator-style takeover scenarios by a super-intelligent AI, CodedBias takes a hard look at the real problems AI technologies are creating today. It does a great job of highlighting the issue of bias in AI and how it adversely affects whole demographics. The point is most strikingly illustrated by the example of a recidivism algorithm that discriminates against Black defendants. Nor do we need to look far to find instances of AI bias beyond those raised in the documentary. Even Google Translate, a seemingly innocuous public service, can exhibit bias (see the image below). Bias in AI is a real issue, and as the consumers and subjects of these algorithms, we should all be worried.

An example of AI bias when translating from a gender-neutral language, Hungarian, to English.¹

The documentary rightly steps back to look at the bigger picture of how these technologies are being used. Even if AI were free of inherent bias, is AI-powered video surveillance really the way to go? Do we want to hand our data to corporations so that they can predict our behaviour with ever-increasing accuracy? These are questions that we, as a society, have to answer.

Is developing AI worth the risk?

Given the risks outlined in CodedBias, one might be left wondering: is using AI worth it? The answer is an emphatic YES. This might come as a surprise to those of you who have seen CodedBias, since the documentary leaves this side of the discussion out for the sake of cohesive storytelling. In reality, the potential to do social good with AI is enormous. Even before we perfect self-driving cars, AI-based advanced driver-assistance systems will save human lives. AI advances in radiology will help doctors diagnose cancer and enable more personalised treatment plans. AI will speed up and improve drug discovery, giving us the chance to treat and cure more diseases. In agriculture, AI lets farmers monitor the health of their crops with unprecedented accuracy, which enables more sustainable farming. And the list goes on.

The path forward: how do we ensure unbiased AI?

The question is: how can we reap the benefits of AI while eliminating its downsides? CodedBias makes the case that regulation is necessary; what we need is a sort of “FDA² for algorithms”³. In a pledge, the makers of CodedBias demand that:

“… algorithmic systems be vetted for accuracy, bias, and non-discrimination, evaluated for harms and capacity for abuse, and subject to continuous scrutiny.”⁴

This is a call that I fully support! When AI algorithms are used to assess our teachers or determine our creditworthiness, when they impact human lives or society at any scale, we should expect them to be regulated. I am glad that regulators in healthcare, aviation, automotive, and other industries are already developing compliance frameworks. That said, I don’t envy those regulators, because they face an incredibly tough job. If the regulations are too lax, society loses. If they are too strict, they stifle innovation and, again, society loses. And if they end up so complex that only the big players can follow them (you guessed it), society loses. To walk the fine line between regulations that are too lax, too strict, or too complex, lawmakers would benefit from developing them through short, use-case-driven projects involving researchers and companies of varying sizes. This way, we stand a good chance of avoiding the scenarios CodedBias describes.

Of course, regulation is not a silver bullet that will immediately solve all AI-related issues, and the problem is far too complex for any single stakeholder to solve. Regulators can control the process and set expectations, but they cannot solve the underlying technical difficulties. Researchers have a lot of work to do in investigating the many ways bias can creep into AI algorithms. Engineers, in turn, are responsible for building the tools needed not only to detect bias but also to ensure that AI systems work as intended. Indeed, creating truly trustworthy AI will require the concerted effort of many, including society as a whole. But I’m optimistic that we will rise to the challenge. In the meantime, I recommend watching CodedBias to get a better understanding of the wider issues surrounding AI!

Thanks to Mateo, David, Lucy and Ina for reading and commenting on drafts of this article. Image credits to Niv Singer and Unsplash.